Remote and local notifications are not new at all. Ever since remote (push) notifications arrived on the 17th of June, 2009, notifications have arguably been the favourite mechanism for delivering messages to the user. Those messages have ranged from proximity alerts for a store, updates to an app and directional instructions to illegal promotions.

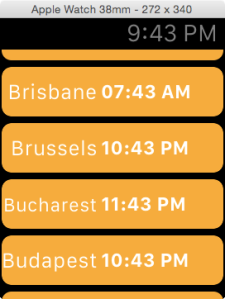

But on Apple Watch, notifications take on a new dimension. From the way notifications are designed for the Watch, it is quite evident that Apple has spent a considerable amount of energy making them better and more meaningful. The following flow shows how a notification is presented to a Watch user.

- According to the Watch Human Interface Guidelines and the WatchKit Programming Guide, when an app on the iPhone receives a notification, iOS decides where to show it. This is a rather vague statement, but as of now there seems to be no way to control it, even if we specifically need the notification to be shown on the Watch. I also could not find any information on how iOS makes that decision. I guess we’ll have to wait a bit to find out.

- If the notification is sent to the Watch, the user feels a subtle vibration on his wrist or hears a mild audio cue, based on the notification’s payload.

- Alerted, the user raises his hand and is presented with a very minimalistic screen called the Short Look interface. This is an immutable, non-scrollable screen that conveys the most important information about the notification. iOS builds it from a predetermined template and presents it on the screen. This is the Watch’s interpretation of your everyday notification, with just a title provided by you in the notification payload.

- All work and no play makes Jack a dull boy. Who understands this better than Apple? So, here is the shining, playful part: the customisable, scrollable, actionable notification screen. After the Short Look notification is displayed, if the user keeps his hand raised (in the hope that something else will happen…soon…well..anytime now…) or taps on the Short Look interface, the Long Look interface is displayed.

Apple has given you freedom within the aesthetic boundary to design the Long Look interface. You can add buttons and graphics and customise the behaviour when the user taps on it.

But what happens if you don’t provide the Long Look interface? Well, Apple has a backup plan. iOS displays a default interface with the app icon, title string and alert message. Tapping on it launches the app.

OK, so let’s not allow Apple to have all the fun and design our own Long Look interface!

A Long Look interface has three major parts:

- The Sash at the top of the screen: this includes the title string and the icon of the app

- The content: this is your playground. Add any graphics and buttons

- The dismiss button: this is always present, added by iOS, and dismisses the notification once tapped

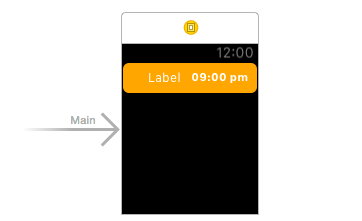

In the Sash section, as a third party developer, you have basic freedom. You can change the tint color and title of the notification.

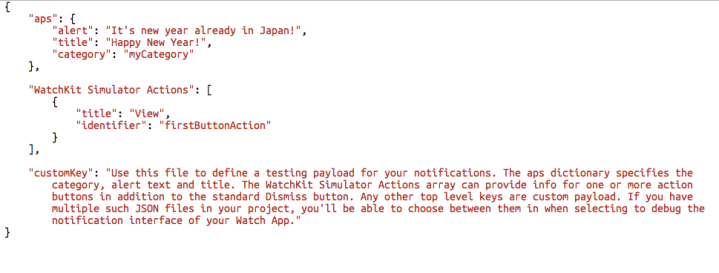

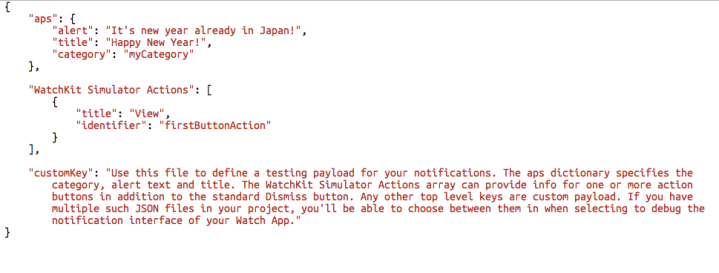

In the content, you have much more liberty. You can modify and design the label that shows your notification message. You can add buttons, but not directly: every button must be described in a specific format in the JSON payload that triggers the notification. When you create a notification scene, Xcode already generates one such JSON payload file for testing purposes.

Changing the alert, title and category controls what the notification screen will display.

As you can see above, the “WatchKit Simulator Actions” array holds a collection of buttons, each in the form of a dictionary. Edit it to add, remove or modify buttons in the notification.
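To make the shape of that file concrete, here is a rough sketch that parses a test payload and lists its action buttons. It uses current Swift syntax and plain Foundation rather than the Swift 1.x used elsewhere in this post. The `title` and `identifier` key names are my assumption about the generated template, and `SimulatorAction` and `simulatorActions(in:)` are names made up for this example, so check them against the file in your own project.

```swift
import Foundation

// A test payload in the shape described above. The values are the ones used
// later in this post; the "title"/"identifier" keys are an assumption.
let payloadJSON = """
{
    "aps": {
        "alert": "Japan is already celebrating new year!",
        "title": "Happy New Year!",
        "category": "NewYear"
    },
    "WatchKit Simulator Actions": [
        { "title": "View", "identifier": "firstButtonAction" }
    ]
}
"""

/// One button, as described by a dictionary in "WatchKit Simulator Actions".
/// (Hypothetical helper type, not part of WatchKit.)
struct SimulatorAction: Equatable {
    let title: String
    let identifier: String
}

/// Reads the action buttons out of a payload.
/// Returns an empty array if the JSON is invalid or the key is missing.
func simulatorActions(in json: String) -> [SimulatorAction] {
    guard let data = json.data(using: .utf8),
          let root = (try? JSONSerialization.jsonObject(with: data)) as? [String: Any],
          let rawActions = root["WatchKit Simulator Actions"] as? [[String: Any]] else {
        return []
    }
    // Skip any entry that lacks a title or an identifier.
    return rawActions.compactMap { entry in
        guard let title = entry["title"] as? String,
              let identifier = entry["identifier"] as? String else { return nil }
        return SimulatorAction(title: title, identifier: identifier)
    }
}

for action in simulatorActions(in: payloadJSON) {
    print("\(action.title) -> \(action.identifier)")
}
```

Adding a second dictionary to the array would give the notification a second button; the identifier is what your code later receives when the button is tapped.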

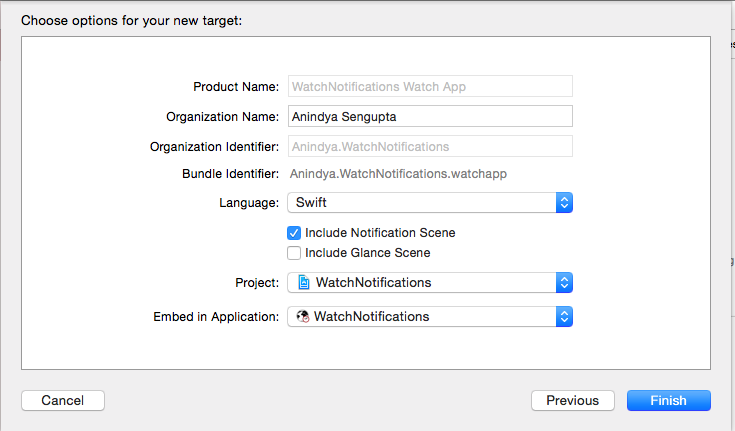

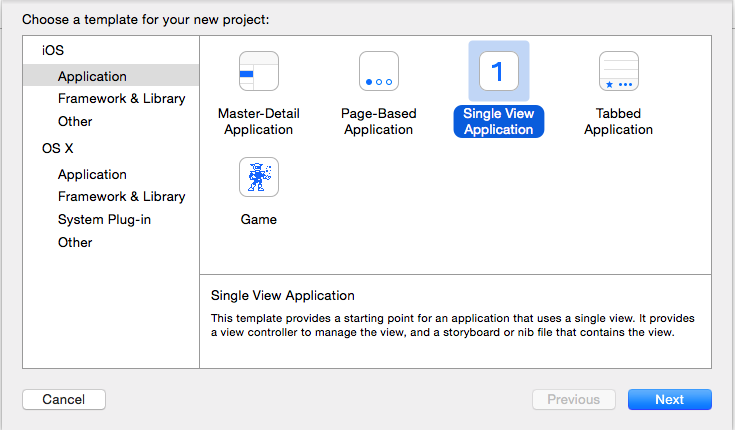

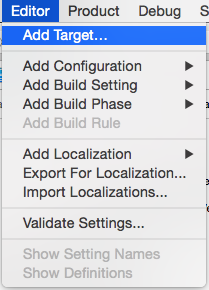

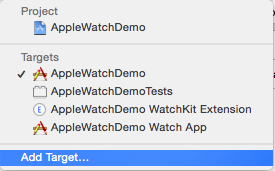

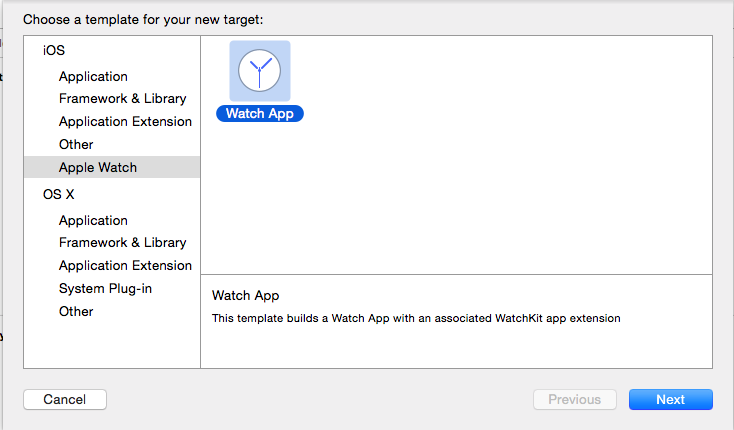

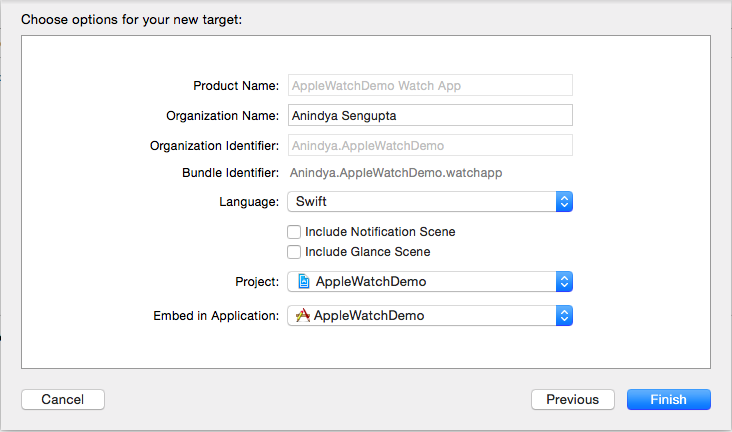

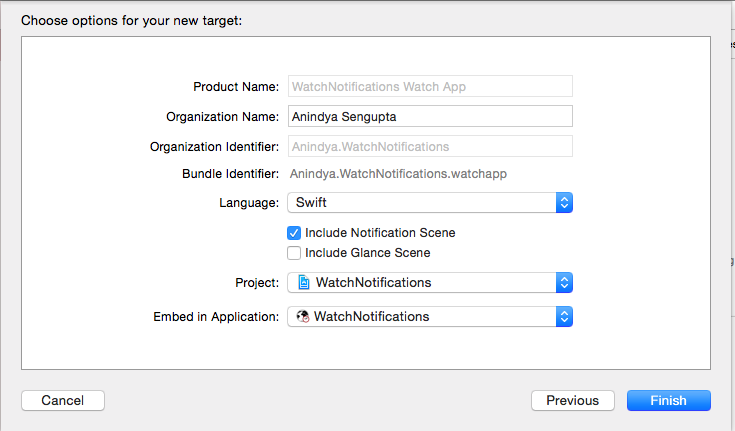

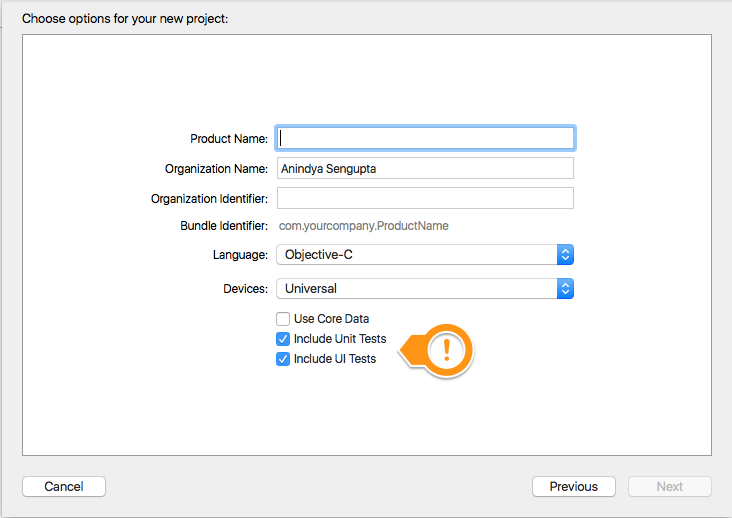

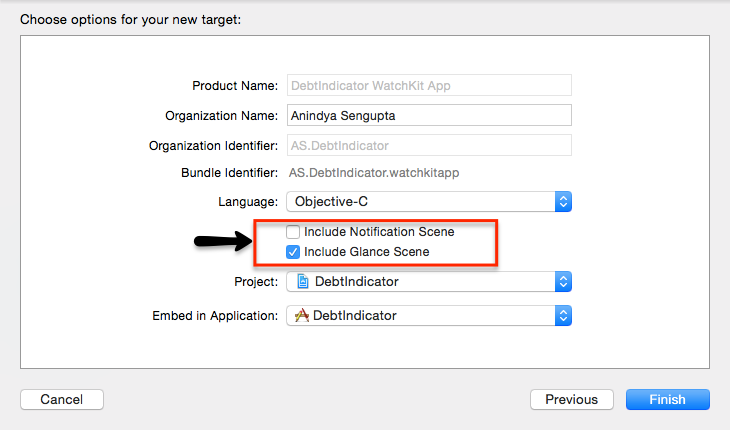

To create a notification, create a new project and add a new Watch Target as discussed in my previous post. This time keep the “Include Notification Scene” checkbox selected to include our notification interfaces.

Include all the necessary app icon images. Apple could not think of any more, so they want only the following few dimensions:

- 29 X 29

- 36 X 36

- 58 X 58

- 80 X 80

- 88 X 88

- 196 X 196

- 172 X 172

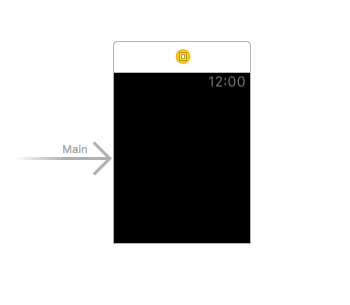

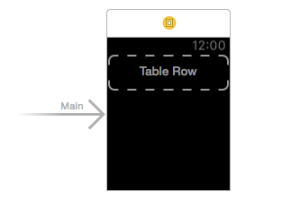

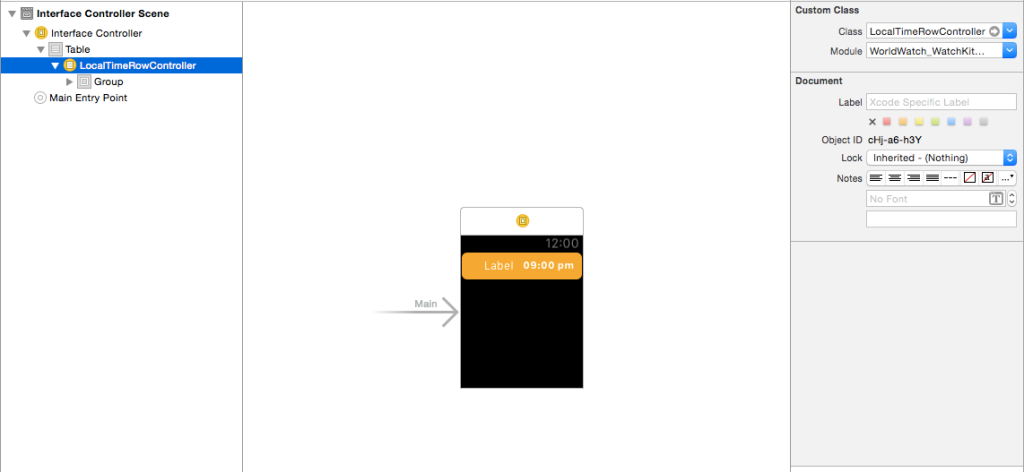

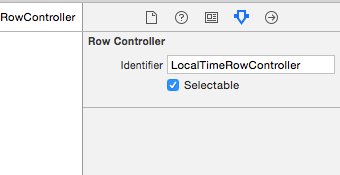

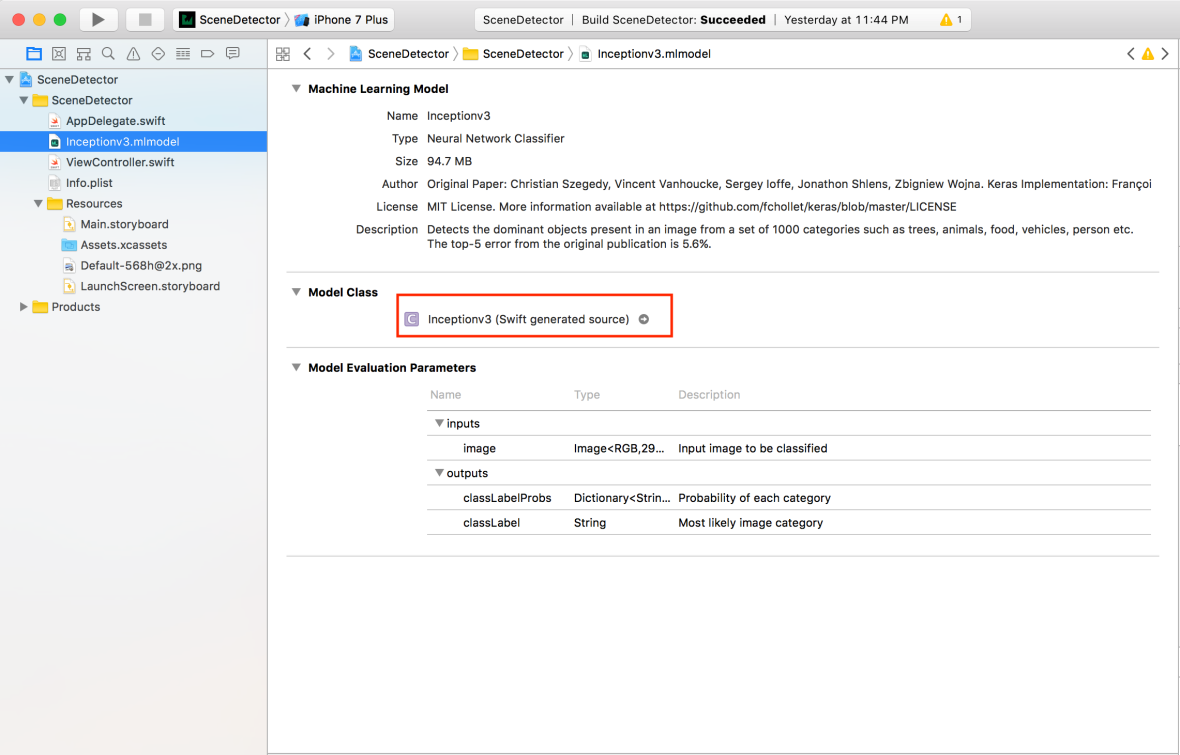

Xcode will generate two extra interfaces for you in Interface.storyboard inside your project (in addition to the usual screen for your Watch app):

- Static Interface: a notification interface that is configured at design time. It is mandatory to keep this interface in your app bundle.

- Dynamic Interface: a notification interface that can be populated with dynamic data at runtime. This is not mandatory.

When a notification arrives, iOS first looks for the Dynamic Interface. If none is found, it falls back to the Static Interface. If the static interface meets your notification requirements, it is safe to delete the dynamic interface. The system can also be explicitly instructed not to show the dynamic interface.
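The lookup order described above can be pictured with a tiny model. This is not WatchKit code: the enum, the function and its parameter names are all made up for illustration, and the real decision is made by the system. It is written in current Swift syntax.

```swift
import Foundation

/// The two notification interfaces a Watch app can ship (illustrative names).
enum NotificationInterface {
    case dynamic
    case staticFallback
}

/// Hypothetical model of the choice: prefer the dynamic interface when one
/// exists and the app has not asked for the static one; otherwise fall back.
func interfaceToShow(hasDynamicInterface: Bool, appRequestsStatic: Bool) -> NotificationInterface {
    if hasDynamicInterface && !appRequestsStatic {
        return .dynamic
    }
    return .staticFallback
}

// No dynamic interface in the storyboard: the static one is used.
print(interfaceToShow(hasDynamicInterface: false, appRequestsStatic: false))
```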

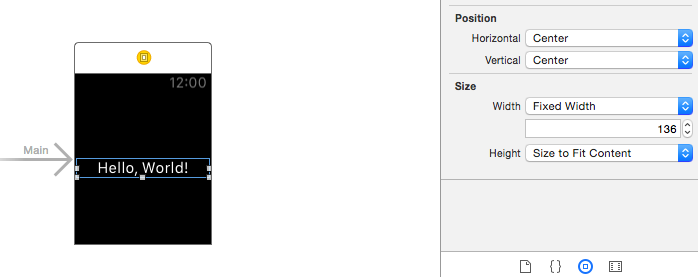

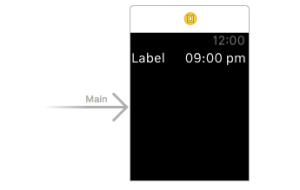

For the time being, let’s change the Static Interface. What we are trying to do here is:

- show a notification, with an action button, stating that Japan is already enjoying the new year

- launch the app when the user taps the “View” button

- display the current time in Tokyo

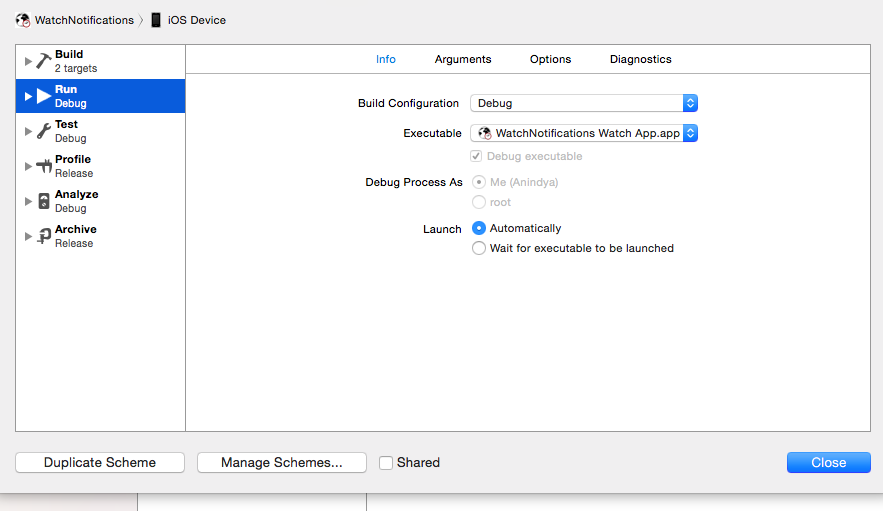

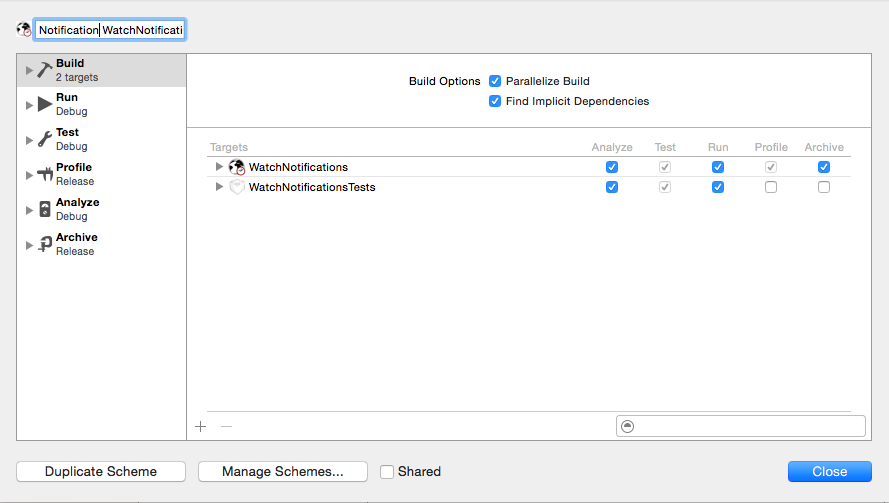

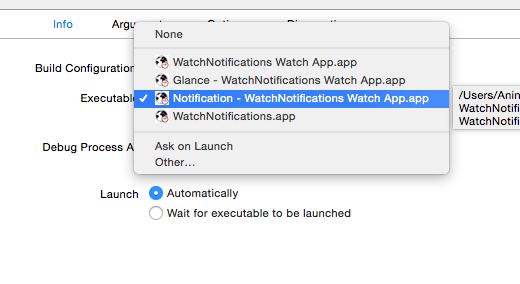

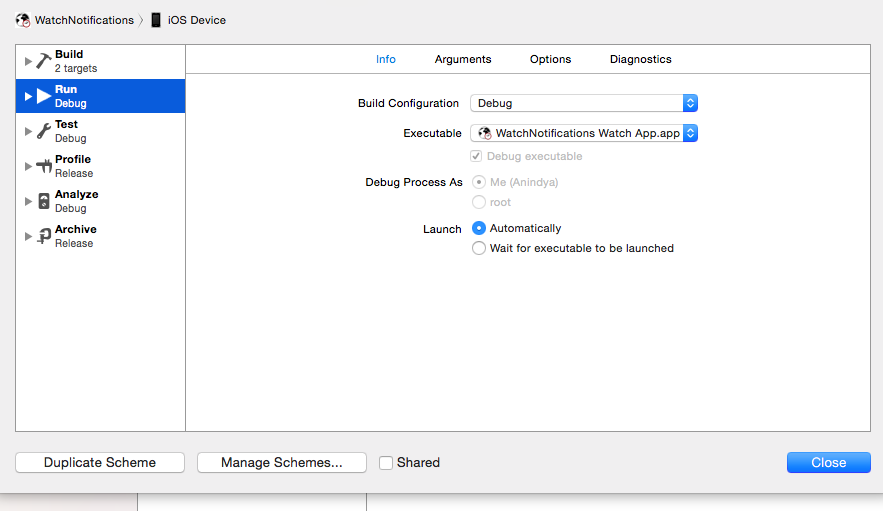

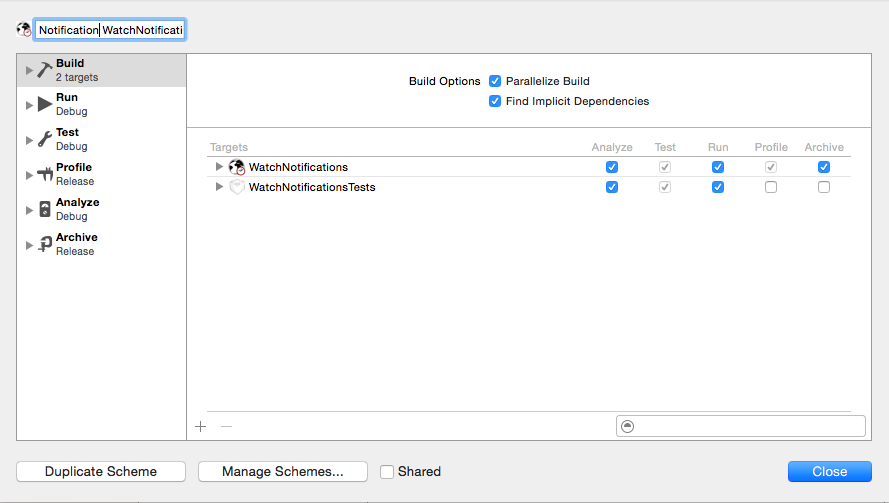

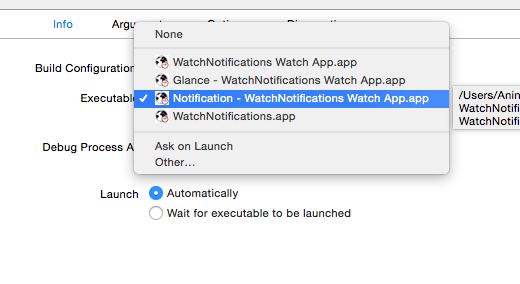

Now create a new scheme for the notification and map the notification executable to that scheme to view the Watch notification in the iOS simulator.

If you build and run your app now, the default static notification screen will show. Note that everything, including the message text and the button text, is pulled from the JSON file included in the bundle. You can try changing them to see whether the change shows up in the notification.

Before we add any behaviour, let’s modify the JSON file to suit our needs by changing the title, message and category (screenshot above). Let’s set them as follows:

```json
"aps": {
    "alert": "Japan is already celebrating new year!",
    "title": "Happy New Year!",
    "category": "NewYear"
}
```

Also, in the Interface.storyboard file, select the “Category” under Static Notification Interface Controller and, in the Attributes inspector, change its name to “NewYear”. Make sure that the category name in the JSON matches the one in the storyboard. Otherwise, the app will not build at all.

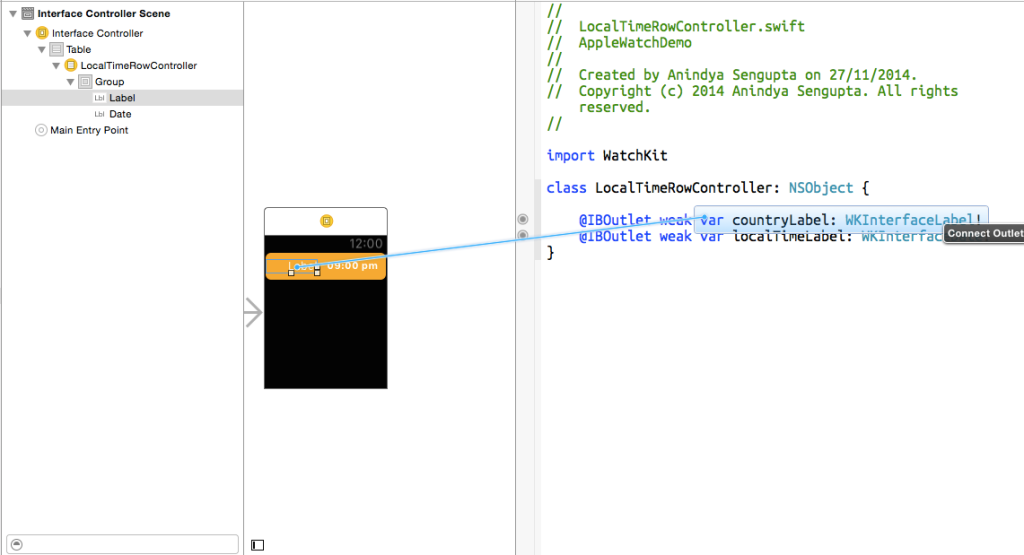

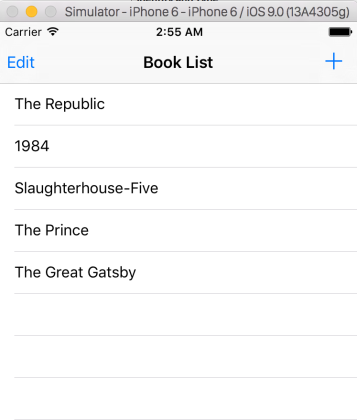

Now we want the user to tap on the button and make something happen. Let’s add a date label to the interface of the Watch app which will display the date based on the timezone set. Hook it to the InterfaceController as dateLabel.

Inside the Interface Controller, we can handle the notification like so:

```swift
@IBOutlet weak var dateLabel: WKInterfaceDate!

override func handleActionWithIdentifier(identifier: String?, forRemoteNotification remoteNotification: [NSObject : AnyObject]) {
    if let id = identifier {
        // Only react to the action button whose identifier is set in the JSON payload.
        if id == "firstButtonAction" {
            // Load the time zone lookup table bundled as Timezones.plist.
            var timeZones: NSDictionary?
            if let path = NSBundle.mainBundle().pathForResource("Timezones", ofType: "plist") {
                let plistKeys = NSDictionary(contentsOfFile: path)
                timeZones = plistKeys?["TimeZones"] as? NSDictionary
            }
            if let dict = timeZones {
                // Switch the date label to Tokyo's time zone, if the plist has an entry for it.
                if let tokyo = dict.valueForKey("Tokyo") as? String {
                    NSLog("%@", tokyo)
                    dateLabel.setTimeZone(NSTimeZone(name: tokyo))
                }
            }
        }
    }
}
```

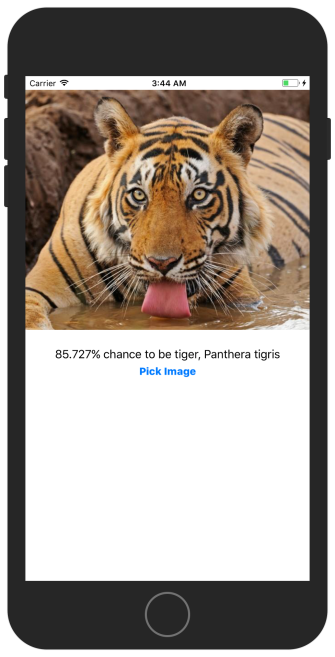

Now build and run the app. At first it will show the screen you designed. Tapping on the dismiss button dismisses the notification screen. Tapping on the “View” button shows Tokyo’s current time.
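If you want to sanity-check a time zone name before putting it in the plist, a small Foundation-only sketch like the one below can help. It is written in current Swift syntax, not the Swift 1.x used in the project. The function name `currentTime(inZone:at:)` is made up for this example, and "Asia/Tokyo" is my assumption about what Timezones.plist stores under the "Tokyo" key.

```swift
import Foundation

/// Formats `date` as wall-clock time in the given time zone.
/// Returns nil if the identifier is not a known time zone.
/// (Hypothetical helper, not part of the sample project.)
func currentTime(inZone identifier: String, at date: Date = Date()) -> String? {
    guard let zone = TimeZone(identifier: identifier) else { return nil }
    let formatter = DateFormatter()
    // A fixed locale keeps the output format independent of device settings.
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.dateFormat = "yyyy-MM-dd HH:mm"
    formatter.timeZone = zone
    return formatter.string(from: date)
}

// "Asia/Tokyo" is an assumed plist value; replace it with whatever your plist holds.
if let tokyoTime = currentTime(inZone: "Asia/Tokyo") {
    print("Tokyo: \(tokyoTime)")
} else {
    print("Unknown time zone identifier")
}
```

An unknown identifier yields nil here instead of a crash, which is the same reason the handler in the post is safer with optional casts than with forced ones.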

Right now, the app is showing the static notification screen. To show a custom notification with data that can only be supplied at runtime, we need to modify the Dynamic Interface. The Dynamic Interface is controlled by NotificationController.swift. If you navigate there, you will find two functions commented out:

```swift
override func didReceiveRemoteNotification(remoteNotification: [NSObject : AnyObject], withCompletion completionHandler: ((WKUserNotificationInterfaceType) -> Void))

override func didReceiveLocalNotification(localNotification: UILocalNotification, withCompletion completionHandler: (WKUserNotificationInterfaceType) -> Void)
```

Uncomment didReceiveRemoteNotification and make sure that the completionHandler is called with .Custom:

```swift
override func didReceiveRemoteNotification(remoteNotification: [NSObject : AnyObject], withCompletion completionHandler: ((WKUserNotificationInterfaceType) -> Void)) {
    // Tell WatchKit to display the custom interface.
    completionHandler(.Custom)
}
```

Now, if we make any modification to the dynamic interface, the notification screen shows the dynamic interface with those changes. This is because, as I mentioned earlier, iOS looks for the custom dynamic interface first. Only if it cannot find one does it load the static one.

Try changing .Custom to .Default to see your static interface.
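A dynamic interface usually wants to pull something out of the payload before it calls the completion handler. As a rough, Foundation-only sketch (current Swift syntax, not the Swift 1.x API above), the helper below extracts the message text. The name `alertText(from:)` is made up for this example, and handling an `alert` dictionary with a `body` key is an assumption about payloads you might receive, since the test payload in this post uses a plain string.

```swift
import Foundation

/// Returns the message text from a remote notification payload.
/// Handles "alert" given as a plain string or as a dictionary with a "body" key.
/// (Hypothetical helper, not part of WatchKit.)
func alertText(from payload: [AnyHashable: Any]) -> String? {
    guard let aps = payload["aps"] as? [String: Any] else { return nil }
    if let text = aps["alert"] as? String {
        return text
    }
    if let alert = aps["alert"] as? [String: Any] {
        return alert["body"] as? String
    }
    return nil
}

// The same values as the test payload used in this post.
let payload: [AnyHashable: Any] = [
    "aps": [
        "alert": "Japan is already celebrating new year!",
        "title": "Happy New Year!",
        "category": "NewYear"
    ]
]
print(alertText(from: payload) ?? "no alert text")
```

Inside the notification controller you would set the returned string on a label of the dynamic interface and then call the completion handler with .Custom.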

You can download the whole project from GitHub: https://github.com/an-indya/WatchNotifications

Hope you will enjoy building for Watch as much as I did. I will try putting in something more as I learn. Please do leave a reply and feel free to share if you like!

Hope this helps!
